Bit Concept in Definition ABC

Miscellanea / July 04, 2021

By Francisco Cano, October 2014

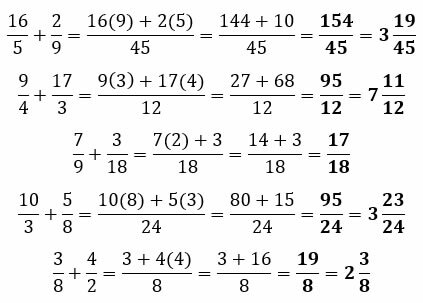

The upper part of the image shows that a particular combination of zeros and ones within an 8-bit sequence is equivalent to the number 6. The lower part shows that mathematical operations can be performed on bits, although each bit of the result can only ever be 0 or 1.
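Since the image itself is not reproduced here, the following minimal Python sketch only illustrates the two ideas it describes. The number 6 is written as an 8-bit sequence and converted back, and a small truth table shows that bitwise operations on single bits always yield 0 or 1. Which operations the image actually uses is not stated in the text, so AND, OR and XOR are an illustrative assumption, and the function names are hypothetical.

```python
def to_byte_string(n: int) -> str:
    """Return n (0..255) as an 8-character string of 0s and 1s."""
    if not 0 <= n <= 255:
        raise ValueError("an 8-bit sequence holds values 0..255")
    return format(n, "08b")  # zero-padded binary, width 8


def from_byte_string(bits: str) -> int:
    """Add up the positional weights (128, 64, ..., 2, 1) of the 1 bits."""
    return sum(int(b) << (7 - i) for i, b in enumerate(bits))


def truth_table() -> list:
    """Rows of (x, y, x AND y, x OR y, x XOR y) for single bits."""
    rows = []
    for x in (0, 1):
        for y in (0, 1):
            rows.append((x, y, x & y, x | y, x ^ y))
    return rows


print(to_byte_string(6))              # 00000110
print(from_byte_string("00000110"))   # 6  (4 + 2)
for row in truth_table():
    print(row)
```

Every result column of the truth table contains only 0s and 1s, which is the property the text points out.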

In computing, a bit is considered the basic unit of information: it indicates whether something is on or off. It can also be described as whether or not electric current flows through a circuit, which in essence is the same as the circuit being on or off. A system that represents information using only these two states, on and off, is called the binary system.

The term comes from the English binary digit, that is, a digit of the binary system. There are many counting systems; one example is the sexagesimal (base-60) system, which is used almost everywhere to measure time. Binary was adopted in computing because a machine lacks a person's ability to make fine distinctions: it can only reliably distinguish two states. Work is currently under way to change that, but it will still be a long time before this is achieved and becomes practical for ordinary people.
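To make the comparison between counting systems concrete, here is a short Python sketch of positional notation: the same repeated-division procedure produces binary digits (base 2) or sexagesimal digits (base 60). The helper name `to_digits` and the sample values are illustrative choices, not part of the original text.

```python
def to_digits(n: int, base: int) -> list:
    """Digits of a non-negative integer n in the given base, most significant first."""
    if base < 2:
        raise ValueError("base must be at least 2")
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)  # peel off the lowest digit
        digits.append(remainder)
    return digits[::-1]


print(to_digits(6, 2))      # [1, 1, 0]  -> binary 110
print(to_digits(3725, 60))  # [1, 2, 5]  -> 1 h 2 min 5 s
```

A base-2 digit can only be 0 or 1, which is why two distinguishable physical states are enough to store it.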

In the binary system, information is expressed as different combinations of off and on states (that is, of bits) within an 8-bit sequence.
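A quick way to see how many combinations an 8-bit sequence allows is to enumerate them. This Python sketch uses `itertools.product` to list every pattern of eight 0s and 1s; the function name `all_bit_patterns` is hypothetical.

```python
from itertools import product


def all_bit_patterns(width: int) -> list:
    """Every string of `width` characters drawn from '0' and '1', in counting order."""
    return ["".join(p) for p in product("01", repeat=width)]


patterns = all_bit_patterns(8)
print(len(patterns))   # 256, i.e. 2 ** 8
print(patterns[:3])    # ['00000000', '00000001', '00000010']
print(patterns[6])     # 00000110
```

Because `product` varies the last position fastest, the list comes out in the same order as binary counting, so each pattern's position in the list equals the number it represents.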

One might ask how the system can tell that the circuit is off at one position if it was also off at the previous one. The answer is that each bit is also tied to a time slot. If nothing happens during that (very short) slot, the bit is read as off; if nothing happens during the next slot, that bit is also read as off, and so on until the 8 time slots that make up a byte have passed, leaving the complete byte for the machine to interpret.
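The time-slot idea can be simulated in a few lines of Python. In this sketch each slot is modelled as a single observation (True if a pulse occurred, False if nothing happened), and the bits are assembled most significant first. Both the one-observation-per-slot model and the bit order are simplifying assumptions; real hardware interfaces define their own timing and bit order. The function name `read_byte` is hypothetical.

```python
def read_byte(slots: list) -> int:
    """Assemble one byte from 8 time slots, most significant bit first.

    A slot in which a pulse was seen is read as 1; an empty slot is read as 0.
    """
    if len(slots) != 8:
        raise ValueError("a byte needs exactly 8 time slots")
    value = 0
    for pulse in slots:
        value = (value << 1) | (1 if pulse else 0)  # shift in the next bit
    return value


# Five empty slots in a row are still five separate 0 bits,
# because each one occupies its own time slot.
slots = [False, False, False, False, False, True, True, False]
print(read_byte(slots))  # 6
```

Counting slots, rather than looking for changes in the signal, is what lets consecutive identical bits be told apart.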

Building quantum bits, or qubits, requires very complex machines that are extremely delicate to operate. For this reason, qubit technology is still a long way from becoming part of everyday life.

Computers give the impression of being able to do things that people find very impressive, but because they can only distinguish between two possibilities, they depend entirely on the programs they are given. Beyond that programming, all a computer can do is execute it very quickly. In the future there will be so-called qubits, which can hold a combination of 1 and 0 at the same time, so that a group of them can represent all possible combinations of 1s and 0s within a byte at once. With about 60 qubits, a computer could, for certain tasks, match the power of all the computers on the planet combined.
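One way to get a feel for the numbers behind this claim is to count how much a classical computer would need to store in order to simulate qubits directly. In the standard state-vector description, n qubits are described by 2^n complex amplitudes, one for each combination of n bits. The Python sketch below assumes each amplitude is stored as two 64-bit floats (16 bytes). It shows how quickly the memory required grows; it does not measure computing "power" and does not by itself confirm the 60-qubit comparison above.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats


def amplitudes_needed(n_qubits: int) -> int:
    """Number of complex amplitudes in the state vector of n qubits."""
    return 2 ** n_qubits


for n in (8, 30, 60):
    count = amplitudes_needed(n)
    print(n, count, count * BYTES_PER_AMPLITUDE)
# 8 256 4096
# 30 1073741824 17179869184
# 60 1152921504606846976 18446744073709551616
```

For 8 qubits there is one amplitude per possible byte (256), matching the idea of holding all byte combinations at once; for 60 qubits the plain state vector would take 2^64 bytes (16 EiB).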
