Latency in Computer Networks

Miscellanea / / July 04, 2021

By Guillem Alsina González, on Feb. 2019

As the Internet expands and its capabilities improve, the range of applications it is used for grows. For example, who would have thought a few years ago that video streaming services would exist?

And as the applications that run on the network of networks multiply and new ideas for using it emerge, they in turn drive improvements in the network's performance and infrastructure.

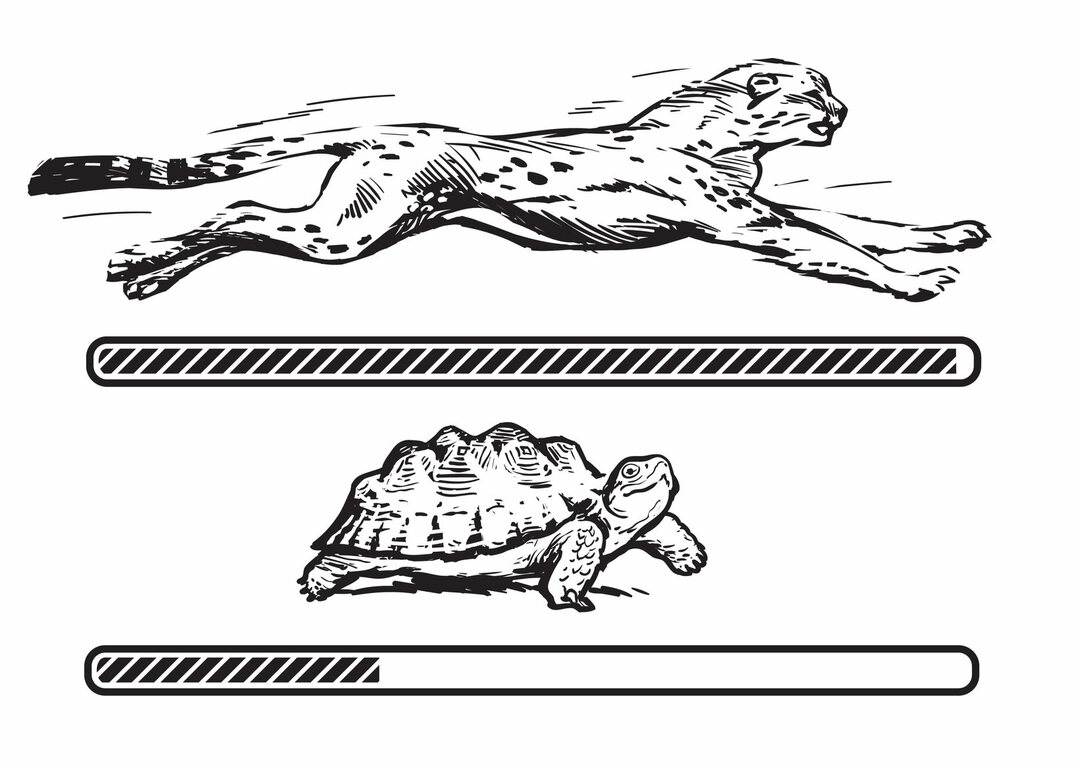

One of the key parameters for the proper functioning of data networks, including the Internet, is latency, which must be kept to a minimum for multimedia applications such as ultra-high-definition (UHD, 4K) video streaming or video games.

Latency is the accumulated delay within a computer network: the sum of the successive delays that occur between one segment and the next.

These delays are inherent to the medium itself. Although they are only on the order of milliseconds and might not seem like a serious problem, in a packet-based network such as the Internet they can cause a movie or a video game to stall.

The latter can be very annoying, since it can mean, for example, that our character is killed or that we lose the game.

The Internet is a packet-based network, which means that information is split into pieces that are sent separately; each packet may even travel along a different path, depending on network conditions.

As a result, each packet follows a multi-hop path, going from server to server and from switch to switch or router, until it reaches its destination. Handling these packets in the servers and network devices holds each packet up very briefly, and the sum of these brief delays is what we call latency.
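The accumulation described above can be sketched in a few lines of Python. This is only an illustration: the per-hop delay values are made up, and `total_latency_ms` is a hypothetical helper name, not part of any real tool.

```python
# Hypothetical delays (in milliseconds) introduced at each hop of a path.
# The values are invented purely for illustration.
hop_delays_ms = [0.4, 1.2, 0.8, 3.5, 0.6]


def total_latency_ms(delays):
    """Return the one-way latency as the sum of the delays at each hop."""
    return sum(delays)


print(f"{total_latency_ms(hop_delays_ms):.1f} ms over {len(hop_delays_ms)} hops")
```

No single hop here is slow, yet the delays add up to several milliseconds, and a longer path would only add more terms to the sum.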

The capacity of the intermediate devices encountered along these “hops” also matters, since some of them, for example those with insufficient output capacity, can cause “queues” of packets waiting to be processed.
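To see how such a queue builds up, here is a minimal sketch that assumes a deliberately simplified device: it forwards one packet at a time, in arrival order, and needs a fixed time per packet. The function name `queue_waits_ms` and the timings are assumptions chosen for the example, not measurements of real equipment.

```python
def queue_waits_ms(arrival_times_ms, service_ms):
    """Waiting time of each packet at a device that forwards packets
    one at a time, in arrival order, taking service_ms per packet."""
    waits = []
    free_at = 0.0  # moment at which the device finishes the previous packet
    for arrival in arrival_times_ms:
        start = max(arrival, free_at)  # wait if the device is still busy
        waits.append(start - arrival)
        free_at = start + service_ms
    return waits


# Four packets arrive 1 ms apart, but the device needs 2 ms for each one.
print(queue_waits_ms([0.0, 1.0, 2.0, 3.0], service_ms=2.0))
# Same arrivals, but the device only needs 0.5 ms per packet.
print(queue_waits_ms([0.0, 1.0, 2.0, 3.0], service_ms=0.5))
```

In the first case every packet waits longer than the one before it, because the device cannot keep up; in the second case no packet waits at all.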

We are talking, of course, about very short waiting times, on the order of milliseconds, but they can grow if one of the intermediate points goes down and traffic has to be rerouted.

A sum of delays that is considerable at the scale we are working with can mean that a film pauses to refill the video buffer, or that the action in a video game freezes or skips, something that may seem insignificant but is very annoying.

That is why operators that run their own networks are working on improving their latency (which means reducing it so that information flows continuously) and on offering specialized connections with minimal latency for very specific customer profiles, such as die-hard online video game enthusiasts.

Although there is talk of “zero latency”, there will always be some latency, however small.

Although zero latency is technically impossible, latency can come so close to zero that, in practice, it feels as if there were none.

And there will always be some, however small, because of the physical medium itself, which makes it impossible for information emitted at one point to be received instantly at another, even over a fiber-optic cable laid in a straight line.
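A rough calculation shows the size of this physical floor. The sketch below assumes that light in optical fiber travels at the speed of light in a vacuum divided by the fiber's refractive index, and takes 1.47 as a typical approximate value for that index; both the index and the 1,000 km distance are assumptions for illustration, and the helper name `fiber_delay_ms` is hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum, in km/s
FIBER_REFRACTIVE_INDEX = 1.47      # assumed typical value; real fibers vary


def fiber_delay_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Minimum one-way propagation delay over a straight fiber, in ms."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return distance_km / speed_in_fiber_km_s * 1000.0


print(f"{fiber_delay_ms(1000):.2f} ms for 1,000 km of fiber")
```

Under these assumptions, 1,000 km of perfectly straight fiber already costs about 4.9 ms one way, before any server, switch, or router has touched the packet.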

Photo Fotolia: Olena
